Delivering data projects in healthcare is a complex endeavor with unique challenges that can make or break success. Teams must navigate the stringent demands of HIPAA and guard against sophisticated cyberattacks, untangle the web of interoperability standards between electronic health records (EHRs) and third-party platforms, and manage the overwhelming volume, variety, and velocity of healthcare data. Each of these barriers requires strategic focus and expert coordination, and understanding all three is essential for healthcare organizations aiming to transform raw data into improved patient care without compromising security or compliance.

This article explores these core barriers and offers practical insights on how to overcome them to bring calm to the chaos of healthcare data projects.

HIPAA and PHI/PII Standards: What Do They Mean for Data Analysis?

I don’t need to belabor how much HIPAA affects the decisions of healthcare organizations; it is the golden rule for anyone in or adjacent to healthcare. Even outside of healthcare, if you work in data, you have sat through yearly training videos about personally identifiable information (PII) and protected health information (PHI) and how important it is to safeguard them.

Moreover, HIPAA’s cybersecurity safeguards for electronic protected health information (ePHI) are evolving, with proposed new compliance requirements expected to drive substantial investments in updated technologies and processes like multi-factor authentication and advanced encryption protocols over the next five years. Healthcare organizations are anticipating compliance deadlines spanning from 2028 to 2030, along with the significant costs these upgrades will entail.

What does that mean for healthcare organizations looking to analyze data? Cybersecurity is paramount. Consider the reputation and size of the data platform provider you’re working with: you are more likely to trust an established vendor’s tools, such as Microsoft’s analytics offerings, than those of a smaller company you haven’t heard of before. The flip side is that those “higher caliber” products are also likely to cost more.

Whichever data platform you select, you should consider the following, per hhs.gov:

- System availability and reliability;

- Back-up and data recovery (e.g., as necessary to be able to respond to a ransomware attack or other emergency situation);

- Manner in which data will be returned to the customer after service use termination;

- Security responsibility; and

- Use, retention and disclosure limitations.

Regardless of a data analytics platform’s reputation and pedigree, breaches are at an all-time high due to AI-driven attacks that are now customized to specific sectors. Nine of the ten biggest breaches in 2025 have been caused by hacking and IT incidents. Healthcare organizations need to invest heavily in QA engineers, penetration testers, and automation engineers to ensure that the connections they have set up between their EHRs and data platforms are secure and that, once in the platform, the data is encrypted or masked whenever possible. Hipaavault.com has a great list of considerations, including specific encryption standards for both data in transit and data at rest.
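To make “encrypted or masked whenever possible” concrete, here is a minimal Python sketch of field-level protection for a hypothetical patient row (the field names `mrn`, `ssn`, and `name` are assumptions). It pseudonymizes the MRN with a keyed hash (HMAC-SHA256) so records can still be joined, masks the SSN for display, and drops a field analysts don’t need. Treat it as an illustration rather than a compliance control: keyed hashing on its own is not HIPAA de-identification under the Safe Harbor or Expert Determination methods, and the key belongs in a managed secret store, not in code.

```python
import hashlib
import hmac

# Hypothetical placeholder key; in practice, load it from a key vault.
PSEUDONYM_KEY = b"replace-with-a-managed-secret"


def pseudonymize(value: str, key: bytes = PSEUDONYM_KEY) -> str:
    """Replace an identifier with a keyed hash (HMAC-SHA256).

    The same input always yields the same token, so joins across tables
    still work, but without the key nobody can map tokens back to MRNs
    by hashing candidate values.
    """
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def mask_ssn(ssn: str) -> str:
    """Keep only the last four digits of an SSN for display."""
    digits = [c for c in ssn if c.isdigit()]
    return "***-**-" + "".join(digits[-4:])


def deidentify_row(row: dict) -> dict:
    """Return a copy of a (hypothetical) patient row with PHI fields protected."""
    out = dict(row)
    out["mrn"] = pseudonymize(row["mrn"])
    out["ssn"] = mask_ssn(row["ssn"])
    out.pop("name", None)  # drop fields analysts do not need at all
    return out
```

Because the token is deterministic, the same MRN pseudonymized in two different extracts still lines up, which is what keeps masked data useful for analysis.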

We’ve had success here by relying on strong project management to keep the requirements top of mind while also supporting the addition of QA phases to the DataOps workflow. In a more traditional waterfall environment, the QA phase falls naturally after development, but we’ve seen some organizations struggle with this when taking a more Agile approach. Whether you expect testing to be complete in the same iteration as development or you separate QA from development and give it its own iteration, the most important things are consistency and a thorough review before releasing to production. We have found that when our project managers coordinate reviews or release meetings, it helps ensure that nothing has been missed and gives leadership more transparency into what’s been done.
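One way to make the pre-release review consistent is to automate part of it. The sketch below is a hypothetical QA gate a DataOps pipeline could run before promoting a dataset to production: it scans string values for patterns that look like unmasked identifiers and returns a nonzero exit code if it finds any. The two regular expressions are illustrative assumptions and will not catch every identifier, so this complements human review rather than replacing it.

```python
import re
import sys

# Illustrative patterns for values that should never reach production
# dashboards unmasked; tune these to the identifiers your data carries.
PHI_PATTERNS = {
    "ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "email": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
}


def find_unmasked_phi(rows: list[dict]) -> list[tuple[int, str, str]]:
    """Return (row index, column, pattern name) for every suspected PHI hit."""
    hits = []
    for i, row in enumerate(rows):
        for column, value in row.items():
            if not isinstance(value, str):
                continue
            for name, pattern in PHI_PATTERNS.items():
                if pattern.search(value):
                    hits.append((i, column, name))
    return hits


def release_gate(rows: list[dict]) -> int:
    """Exit code for a CI step: 0 lets the release proceed, 1 blocks it."""
    hits = find_unmasked_phi(rows)
    for i, column, name in hits:
        print(f"row {i}, column {column!r}: possible unmasked {name}", file=sys.stderr)
    return 1 if hits else 0
```

In a CI step you might call `sys.exit(release_gate(rows))`, with loading `rows` from your staging area left to your own tooling.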

Interoperability: Challenges and Tools

I could talk about this topic for hours, but in short, it’s complicated. Over the past ten years, interoperability and collaboration between EHRs and third parties have improved due to the necessity of meeting HL7 standards, regulations like the 21st Century Cures Act, and a desire to enable healthcare organizations to meet a wide range of use cases. However, there are still a lot of hoops to jump through for third-party data platforms to use an EHR as a data source.

The first complication is the sheer number of standards, including ASC X12, DICOM, HL7 FHIR, HL7 v2, HL7 v3, IHE, NCPDP, various web services, and more. That’s a lot for anyone to keep track of, let alone understand deeply. This complexity becomes especially challenging when working with ASC X12, the standard format for most EDI transactions in healthcare (eligibility, claims, remittance, enrollment, and others). While widely used, X12 files are notoriously rigid and verbose, and parsing them reliably can be painful without the right tools.
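To show why X12 is painful without tooling, here is a minimal Python sketch of the first step any parser has to take: splitting an interchange into segments and elements. It reads the element separator from the character right after `ISA` (where conforming interchanges declare it) and assumes `~` as the segment terminator, which is common but not guaranteed. Real files also declare repetition and component separators, which this sketch leaves unsplit.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    tag: str             # segment ID, e.g. "ISA", "CLP", "NM1"
    elements: list[str]  # data elements after the tag, in order


def parse_x12(raw: str, segment_terminator: str = "~") -> list[Segment]:
    """Split an X12 interchange into segments and their elements.

    Assumes the element separator is the character right after "ISA" and
    that segments end with "~" unless told otherwise. Composite and
    repeating elements are returned as unsplit strings.
    """
    raw = raw.strip()
    if not raw.startswith("ISA"):
        raise ValueError("expected the interchange to begin with an ISA segment")
    element_sep = raw[3]
    segments = []
    for chunk in raw.split(segment_terminator):
        chunk = chunk.strip("\r\n")  # many senders add a newline after each "~"
        if not chunk:
            continue
        tag, *elements = chunk.split(element_sep)
        segments.append(Segment(tag, elements))
    return segments
```

Everything past this point (loops, qualifiers, validation against the implementation guide) is where dedicated mapping tools earn their keep.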

In our recent work, we’ve leaned on Talend Data Mapper to accelerate EDI onboarding and normalization. Its ability to visually model and translate X12 segments into structured data – while handling quirks like nested loops, qualifiers, and segment repetitions – helps us build reusable integration components quickly. That means less time wrangling raw EDI files and more time delivering actionable data to downstream platforms.
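To illustrate what handling nested loops and qualifiers involves, here is a plain-Python sketch (not Talend code) that groups segments from an 835 remittance into claim-level loops. It assumes `~`, `*`, and `:` as the segment, element, and component separators, starts a new claim at each `CLP` segment, attaches `SVC` service lines to the current claim, and uses the `NM1` entity identifier qualifier `QC` (patient) to pick out the patient name. A production mapper must honor the full loop hierarchy in the implementation guide, which this sketch simplifies.

```python
from decimal import Decimal


def group_claims(raw: str) -> list[dict]:
    """Group 835 segments into claim loops (CLP) with their service lines (SVC).

    Simplified sketch: assumes "~", "*", and ":" separators and ignores
    every segment it does not recognize.
    """
    claims = []
    current = None
    for chunk in raw.split("~"):
        chunk = chunk.strip()
        if not chunk:
            continue
        tag, *el = chunk.split("*")
        if tag == "CLP":
            # CLP01 claim ID, CLP03 total charge, CLP04 payment amount
            current = {"claim_id": el[0], "charge": Decimal(el[2]),
                       "paid": Decimal(el[3]), "patient": None, "services": []}
            claims.append(current)
        elif tag == "SE":
            current = None  # end of the transaction set closes any open claim
        elif current is None:
            continue  # header segments before the first claim
        elif tag == "NM1" and el[0] == "QC":
            # Qualifier QC = patient; NM103 is last name, NM104 first name
            first = el[3] if len(el) > 3 else ""
            current["patient"] = f"{el[2]}, {first}".rstrip(", ")
        elif tag == "SVC":
            # SVC01 is a composite such as "HC:99213" (qualifier:code[:modifiers])
            qualifier, _, rest = el[0].partition(":")
            current["services"].append({
                "qualifier": qualifier,
                "code": rest.split(":")[0],
                "charge": Decimal(el[1]),
                "paid": Decimal(el[2]),
            })
    return claims
```

Using `Decimal` rather than `float` keeps money amounts exact, so service-line payments can be reconciled against the claim total without rounding noise.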

Depending on which of the EHR’s applications you’re trying to work with, the architecture can differ. When I worked as a QA engineer on the Claims and Remittance application at a large EHR company, its endpoints used SOAP/MIME, and because that is still mentioned on the company’s website, I imagine it hasn’t changed. While usable, SOAP is an older architecture that isn’t as efficient or flexible as newer approaches such as RESTful APIs exchanging JSON. If every application consistently used SOAP/MIME, we would only have to figure it out once and then set up the connections. Instead, each application has its own set of connections, so one might use SOAP, another a JSON-based REST API, and another a FHIR DSTU2 interface. Each application has its own setup, which makes the work complicated and tedious for the team building the connections!
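Because each application can expose a different interface, one common design is an adapter per source that normalizes payloads into a single internal record. The Python sketch below shows two hypothetical adapters: one for a FHIR `Patient` resource in JSON (using the standard `id`, `name[].family`, and `birthDate` elements) and one for a SOAP 1.1 response whose payload schema (`urn:example:patients`, `Id`, `LastName`, `DOB`) is invented for illustration. It ignores MIME attachments, authentication, and error handling.

```python
import json
import xml.etree.ElementTree as ET
from dataclasses import dataclass

SOAP_NS = "http://schemas.xmlsoap.org/soap/envelope/"  # SOAP 1.1 envelope
APP_NS = "urn:example:patients"  # hypothetical application namespace


@dataclass
class PatientRecord:
    source: str
    patient_id: str
    family_name: str
    birth_date: str  # ISO 8601 date string, e.g. "1980-04-02"


def from_fhir_patient(payload: str) -> PatientRecord:
    """Adapter for a FHIR Patient resource serialized as JSON."""
    resource = json.loads(payload)
    if resource.get("resourceType") != "Patient":
        raise ValueError("expected a FHIR Patient resource")
    name = (resource.get("name") or [{}])[0]  # first listed name, if any
    return PatientRecord("fhir", resource["id"], name.get("family", ""),
                         resource.get("birthDate", ""))


def from_soap_patient(payload: str) -> PatientRecord:
    """Adapter for a hypothetical SOAP response carrying one Patient element."""
    root = ET.fromstring(payload)
    patient = root.find(f"{{{SOAP_NS}}}Body/{{{APP_NS}}}Patient")
    if patient is None:
        raise ValueError("no Patient element in SOAP body")
    return PatientRecord(
        "soap",
        patient.findtext(f"{{{APP_NS}}}Id", default=""),
        patient.findtext(f"{{{APP_NS}}}LastName", default=""),
        patient.findtext(f"{{{APP_NS}}}DOB", default=""),
    )


# One adapter per source application; downstream code only sees PatientRecord.
ADAPTERS = {"fhir": from_fhir_patient, "soap": from_soap_patient}
```

Downstream ETL then works only with `PatientRecord`, so onboarding another application means writing one more adapter rather than touching every consumer.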

Another consideration for the consumers of these dashboards is how often the data is refreshed. An EHR can “offer interfaces that are compliant with HL7, X12, DICOM, NCPDP, and other standards and can support interfaces that are either real-time or batch, one-way or bidirectional, point-to-point or mediated by an interface engine,” but that means that, depending on which interface an application uses, its data may be refreshed only once a day in a batch or in real time. Luckily, data analysts are most likely looking at dashboards with data from a single application, so the refresh rate should be consistent for each set of consumers, but it does add complication and nuance for the data engineers building them.
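Since refresh cadence varies by interface, it helps to monitor freshness per source. The sketch below assumes you record, for each feed, when it last loaded successfully and how often it is expected to arrive (for example, a day for a batch feed and minutes for a real-time one), and it flags any feed overdue by more than a grace factor. The feed names and the 1.5x default are illustrative assumptions.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Optional


@dataclass
class SourceFeed:
    name: str                  # hypothetical feed name, e.g. "claims_batch"
    expected_every: timedelta  # expected arrival interval for this feed
    last_loaded: datetime      # timezone-aware time of the last successful load


def stale_feeds(feeds: list[SourceFeed], now: Optional[datetime] = None,
                grace: float = 1.5) -> list[str]:
    """Names of feeds whose last load is older than grace x expected interval."""
    now = now or datetime.now(timezone.utc)
    return [f.name for f in feeds
            if now - f.last_loaded > f.expected_every * grace]
```

Surfacing the result on the dashboard itself (for example, an “overdue” banner per source) tells consumers when the numbers they see are older than usual.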

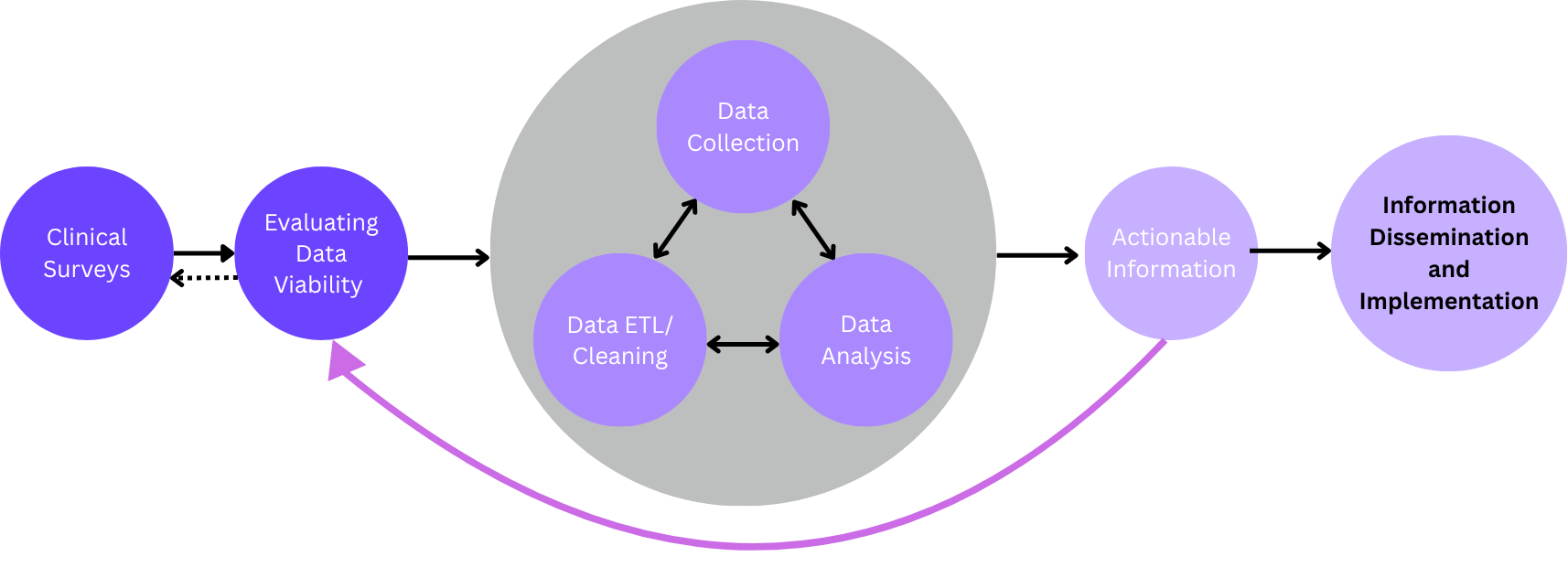

Big Data: The Big, Important Buzzword

While healthcare organizations have unique considerations and use cases for their data as well as an absolutely astounding wealth of data, the core issues they have during the architecture phase are the same issues every organization has. That means there’s a lot of expertise out there to draw on! In the visualization below, you can think of everything in the gray circle as the universal issues every organization tackles, and the parts of the workflow outside of that circle as unique to a specific healthcare use case regarding health questionnaires. While other organizations may use a similar workflow for user interviews, the use cases for healthcare are unique due to their clinical application.

These universal issues include gathering, cleansing, and consolidating data from different source systems to create a master record, in this case a master patient record. Every organization must do this when creating a data warehouse, because every record must have a unique primary key (here, the MRN), and it’s a particular struggle for healthcare organizations. In fact, A. Lamer et al. found that the complexity of the raw data and of the ETL process represents a significant barrier to successfully setting up a data warehouse. While it can be painful, there are plenty of resources and experienced data engineers who can do this work.
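As a minimal sketch of consolidation into a master patient record, the Python below groups source records by an exact match on normalized last name, first name, and date of birth, then assigns each group a master ID. The field names and the `MPI` ID format are hypothetical. Real master patient indexes typically use probabilistic matching (for example, Fellegi-Sunter-style weighting) plus manual review, because exact keys miss typos and nickname variants, so treat this as an illustration of the idea rather than a matching strategy.

```python
import unicodedata
from collections import defaultdict


def normalize(text: str) -> str:
    """Uppercase and strip accents and punctuation, so "O'Néil" becomes "ONEIL"."""
    decomposed = unicodedata.normalize("NFKD", text)
    return "".join(c for c in decomposed if c.isalnum()).upper()


def match_key(rec: dict) -> tuple[str, str, str]:
    """Exact-match key: normalized last name, first name, and ISO date of birth."""
    return (normalize(rec["last_name"]), normalize(rec["first_name"]), rec["dob"])


def build_master_index(records: list[dict]) -> dict[str, list[dict]]:
    """Assign a hypothetical master patient ID to each group of matching records."""
    groups = defaultdict(list)
    for rec in records:
        groups[match_key(rec)].append(rec)
    master = {}
    ordered = sorted(groups.items(), key=lambda kv: kv[0])
    for n, (_, recs) in enumerate(ordered, start=1):
        master[f"MPI{n:06d}"] = recs
    return master
```

The IDs here are reassigned on every run; a production index would persist them so a patient keeps the same master ID as new records arrive.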

The ETL and standardization of data need to be done because you cannot have duplicate records within or between healthcare organizations. It would be like one person having two Social Security Numbers, or two people sharing one; think of all the issues that could arise in a hospital setting due to a mistaken identity! That aside, once we have a cleansed, centralized repository of data that includes all the possible data sources for a patient, it enables impactful use cases in population health, epidemiology, and other areas.

Accounting for hundreds to thousands of data sources

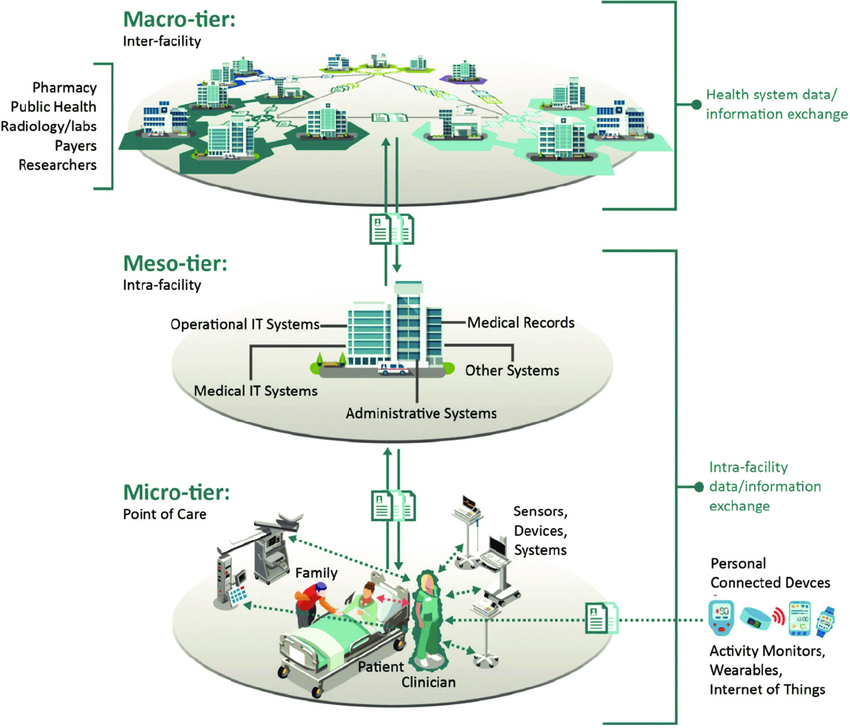

One thing that is unique to healthcare is the sheer amount of data sources we need to account for. Consider the visualization below:

EHRs have gone a long way toward connecting the micro-, meso-, and macro-tiers within their applications, but we now must consider personal devices as well, and the number of integrations in the industry is exploding. This adds significant complexity to the data we want to include in our master patient record. Given the number of sources and the types of data involved (streaming vs. batch, the variety of possible connections and formats, and so on), and depending on the size of your organization, you may be flirting with Big Data territory.

Big Data is characterized by volume, variety, and velocity, and all three are at play here. What does that mean? Not only does your platform need to meet HIPAA requirements and interoperability needs, but it also needs to handle Big Data without becoming overloaded. Data consumers expect a certain level of availability, and if your dashboards take 30 minutes to load, that’s an issue. Many large organizations face the same challenge, so there are plenty of possible solutions, but I don’t want to downplay the fact that it’s yet another layer of complexity in an already complex data environment.

One tool we use that integrates well with Talend Data Mapper is Talend Cloud with Apache Spark. We’ve found that its parallel processing is powerful and fast enough to support real-time analytics on large volumes of data, including streaming and IoT sensor data.
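Much of streaming analytics over IoT data comes down to windowed aggregation. The plain-Python sketch below (not Talend or Spark code) averages readings per device over fixed, non-overlapping (tumbling) windows; Spark Structured Streaming expresses the same idea with `groupBy(window(...))` and distributes it across a cluster. It assumes readings arrive as `(device_id, timestamp, value)` tuples with naive timestamps, and it ignores late-arriving data, which a real streaming job must handle, for example with watermarks.

```python
from collections import defaultdict
from datetime import datetime, timedelta


def tumbling_window_avg(readings, window: timedelta) -> dict:
    """Average values per (device_id, window start) over tumbling windows.

    `readings` is an iterable of (device_id, naive datetime, numeric value).
    Windows are aligned to midnight, so one-minute windows start on the minute.
    """
    sums = defaultdict(lambda: [0.0, 0])  # (device, window start) -> [total, count]
    for device_id, ts, value in readings:
        start = ts - (ts - datetime.min) % window  # floor to the window start
        acc = sums[(device_id, start)]
        acc[0] += value
        acc[1] += 1
    return {key: total / count for key, (total, count) in sums.items()}
```

In a distributed engine, the same per-key accumulation runs in parallel on each partition and the partial sums and counts are merged, which is why averages are computed from totals and counts rather than by averaging averages.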

What to Do Once You’ve Got the Tools

Beyond ensuring that you have the right tools for the job, this is also a good opportunity to empower strong program and project managers who can track the dependencies and interplay between these areas and look at the work from different angles.

One is from the user perspective:

- What are the different workflows we’re trying to enable for our data consumers?

- What data from which sources do we need to accomplish that?

- Who is doing that work and is it visible to the other teams who will build on it?

The second angle is the technical dependencies and how they should be mapped:

- What are your data sources and what ETL needs to happen to normalize that data?

- Are there different teams managing data engineering, ETL, and building dashboards?

- Are these teams being communicated with effectively to know when they’re unblocked?
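The technical-dependency questions above can be answered mechanically once the dependencies are written down. The sketch below uses Python’s standard-library `graphlib.TopologicalSorter` (Python 3.9+) on a hypothetical set of work items to group them into waves: everything in a wave is unblocked once the earlier waves are done, which gives project managers a concrete list of which teams to notify and when. The work-item names are invented for illustration.

```python
from graphlib import TopologicalSorter

# Hypothetical work items, each mapped to the items it depends on.
DEPENDENCIES = {
    "ingest_ehr_feed": set(),
    "ingest_device_feed": set(),
    "normalize_patients": {"ingest_ehr_feed", "ingest_device_feed"},
    "build_master_patient_index": {"normalize_patients"},
    "population_health_dashboard": {"build_master_patient_index"},
}


def release_waves(graph: dict[str, set[str]]) -> list[list[str]]:
    """Group work into waves; each wave is unblocked by all earlier waves."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()  # raises graphlib.CycleError if dependencies form a loop
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        waves.append(ready)
        sorter.done(*ready)
    return waves
```

For this example graph, the two ingestion items form the first wave, followed in turn by `normalize_patients`, `build_master_patient_index`, and `population_health_dashboard`.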

When you can marry these angles to consider the work from both the “start” and the “finished product”, magic happens, and work starts flowing. Everyone can understand what they’re doing, why, and who to go to with the right questions. With this broad data landscape, you need a team of people to ensure that communication is on point, so data teams are enabled to focus on delivering what they’re best at: actionable insights.

Bring Calm to Your Chaos and Become a Healthcare Data Star

We understand the layers of complexity presented by data in healthcare organizations, as we have a long history of integrating with EHRs to develop data dashboards for our clients.

Let us help you sort through the chaos unique to your organization to deliver the best possible solution as painlessly as possible. Drop us a line and we’ll reach out.